[This page is auto-generated; please prefer to read the article’s PDF Form.]

The algorithm may have been discovered multiple times independently, but appears to be first published in 1956 by D. R. Morrison [1]. Morrison provided a generic algorithm to find inverse of any function which meets certain conditions, 2y being one such function. Note, log 2(2y) = y, so the inverse of 2y is the log 2 function.

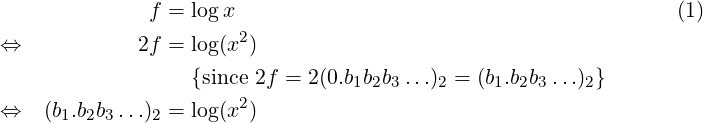

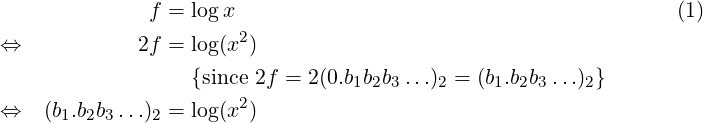

Since 0 ≤ f < 1, it can be written in binary form as: (0.b1b2b3…)2, where each bi is a bit (0 or 1) at position (−i). The algorithm makes use of the below observations:

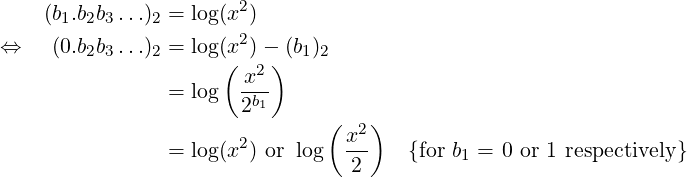

So, x2 ≥ 2 implies b1 must be 1, and x2 < 2 implies b1 must be 0. Thus, b1 can be deduced just by comparing x2 with 2. Further,

If we now consider (0.b2b3…)2 to be our new f, and x2 or x2∕2 (based on b1) to be our new x, then above relation translates to f = log x, which is same as (1). Thus, bit b2 can be found by the same process we used to find b1. Repeating this process m times will give us m bits of f up to bm, which we can combine to give us an approximation for f as (0.b1b2b3…bm)2.

Below is an implementation of this algorithm in C. Input x is assumed to follow 1 ≤ x < 2. m specifies the number of bits to generate for f.

There can be other variants of this algorithm. For example, we can write it for computing logarithm in any base b (log bx). For that, the comparison x2 ≥ 2 needs to be replaced by x2 ≥ b to deduce the bit. And for the new x, division x2∕2 needs to be replaced by x2∕b.

Another variant is to treat f in any other radix d as (0.d1d2d3…)d, where each di is a digit in radix d. Then, instead of generating f one bit at a time, we can generate it one (radix-d) digit at a time. For that, both sides of (1) are multiplied by d, and instead of x2 we need to compute xd. Then, we need to find digit d1 such that 2d1 ≤ xd < 2d1+1. After finding d1, we will compute xd∕2d1 instead of x2∕2b1 for our new x.

Copyright © 2021 Nitin Verma. All rights reserved.